AI Toolkit

Learn how to add the AI Toolkit chatbot to your Flutter application.

Hello and welcome to the Flutter AI Toolkit!

The AI Toolkit is a set of AI chat-related widgets that make it easy to add an AI chat window to your Flutter app. It is organized around an abstract LLM provider API, which makes it easy to swap out the LLM provider that your chat uses. Out of the box, it supports two LLM provider integrations: Google Gemini AI and Firebase Vertex AI.

Key features

- Multi-turn chat: Maintains context across multiple interactions.

- Streaming responses: Displays AI responses in real-time as they are generated.

- Rich text display: Supports formatted text in chat messages.

- Voice input: Allows users to input prompts using speech.

- Multimedia attachments: Enables sending and receiving various media types.

- Custom styling: Offers extensive customization to match your app's design.

- Chat serialization/deserialization: Store and retrieve conversations between app sessions.

- Custom response widgets: Introduce specialized UI components to present LLM responses.

- Pluggable LLM support: Implement a simple interface to plug in your own LLM.

- Cross-platform support: Compatible with Android, iOS, web, and macOS platforms.
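To make the streaming and pluggable-provider features above more concrete, here is a minimal pure-Dart sketch of the general idea. `ChatBackend`, `EchoBackend`, `ShoutBackend`, and `collect` are hypothetical names invented for this illustration. They are not the AI Toolkit's actual provider interface, which has its own types and signatures.

```dart
import 'dart:async';

/// Hypothetical stand-in for an abstract provider. NOT the toolkit's API.
abstract class ChatBackend {
  /// Emits the reply in chunks, the way a streaming LLM response arrives.
  Stream<String> reply(String prompt);
}

/// Streams the reply back one word at a time.
class EchoBackend implements ChatBackend {
  @override
  Stream<String> reply(String prompt) async* {
    for (final word in 'You said: $prompt'.split(' ')) {
      yield '$word ';
    }
  }
}

/// Emits the whole reply as a single upper-cased chunk.
class ShoutBackend implements ChatBackend {
  @override
  Stream<String> reply(String prompt) async* {
    yield '${prompt.toUpperCase()}!';
  }
}

/// Accumulates streamed chunks into the full reply text.
Future<String> collect(ChatBackend backend, String prompt) async {
  final buffer = StringBuffer();
  await for (final chunk in backend.reply(prompt)) {
    buffer.write(chunk);
  }
  return buffer.toString().trimRight();
}

Future<void> main() async {
  // The calling code depends only on the abstract type,
  // so swapping the backend needs no other changes.
  ChatBackend backend = EchoBackend();
  print(await collect(backend, 'hello'));

  backend = ShoutBackend();
  print(await collect(backend, 'hello'));
}
```

Because the caller only sees the abstract type, replacing one backend with another is a one-line change. The same idea is what lets a chat view work with different LLM providers.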

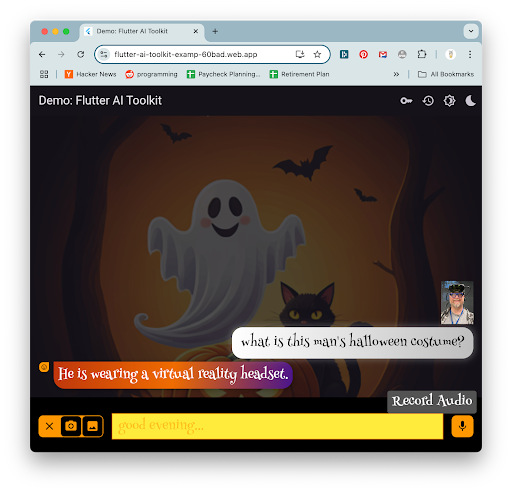

Online Demo

Here's the online demo hosting the AI Toolkit:

The source code for this demo is available in the repo on GitHub.

Or, you can open it in Firebase Studio, Google's full-stack AI workspace and IDE that runs in the cloud:

Get started

- Installation

Add the following dependencies to your `pubspec.yaml` file:

```yaml
dependencies:
  flutter_ai_toolkit: ^latest_version
  google_generative_ai: ^latest_version # you might choose to use Gemini,
  firebase_core: ^latest_version        # or Vertex AI or both
```

- Gemini AI configuration

The toolkit supports both Google Gemini AI and Firebase Vertex AI as LLM providers. To use Google Gemini AI, obtain an API key from Gemini AI Studio. Be careful not to check this key into your source code repository to prevent unauthorized access.
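One way to keep the key out of your repository is to supply it at build time instead of hard-coding it. The following is a minimal sketch, assuming you pass the key with `--dart-define`. The define name `GEMINI_API_KEY` and the helper `requireApiKey` are hypothetical names chosen for this example.

```dart
// Assumption: the key is supplied at build time, for example:
//   flutter run --dart-define=GEMINI_API_KEY=your-key
// GEMINI_API_KEY is a hypothetical name chosen for this sketch.
const String geminiApiKey = String.fromEnvironment('GEMINI_API_KEY');

/// Returns [key] unchanged, or throws if no key was supplied.
String requireApiKey(String key) {
  if (key.isEmpty) {
    throw StateError('Missing GEMINI_API_KEY; pass it with --dart-define.');
  }
  return key;
}

void main() {
  try {
    final key = requireApiKey(geminiApiKey);
    // Never print the key itself; its length is enough for a sanity check.
    print('API key loaded (${key.length} characters).');
  } on StateError catch (e) {
    print(e.message);
  }
}
```

This keeps the key out of source control, but the value is still compiled into the app binary, so it is not a substitute for a server-side or Firebase-based setup in production.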

You'll also need to choose a specific Gemini model name to use in creating an instance of the Gemini model. The following example uses `gemini-2.0-flash`, but you can choose from an ever-expanding set of models.

```dart
import 'package:google_generative_ai/google_generative_ai.dart';
import 'package:flutter_ai_toolkit/flutter_ai_toolkit.dart';

// ... app stuff here

class ChatPage extends StatelessWidget {
  const ChatPage({super.key});

  @override
  Widget build(BuildContext context) => Scaffold(
        appBar: AppBar(title: const Text(App.title)),
        body: LlmChatView(
          provider: GeminiProvider(
            model: GenerativeModel(
              model: 'gemini-2.0-flash',
              apiKey: 'GEMINI-API-KEY',
            ),
          ),
        ),
      );
}
```

The `GenerativeModel` class comes from the `google_generative_ai` package. The AI Toolkit builds on top of this package with the `GeminiProvider`, which plugs Gemini AI into the `LlmChatView`, the top-level widget that provides an LLM-based chat conversation with your users.

For a complete example, check out `gemini.dart` on GitHub.

- Vertex AI configuration

While Gemini AI is useful for quick prototyping, the recommended solution for production apps is Vertex AI in Firebase. This eliminates the need for an API key in your client app and replaces it with a more secure Firebase project. To use Vertex AI in your project, follow the steps described in the Get started with the Gemini API using the Vertex AI in Firebase SDKs docs.

Once that's complete, integrate the new Firebase project into your Flutter app using the `flutterfire` CLI tool, as described in the Add Firebase to your Flutter app docs.

After following these instructions, you're ready to use Firebase Vertex AI in your Flutter app. Start by initializing Firebase:

```dart
import 'package:firebase_core/firebase_core.dart';
import 'package:firebase_vertexai/firebase_vertexai.dart';
import 'package:flutter_ai_toolkit/flutter_ai_toolkit.dart';

// ... other imports

import 'firebase_options.dart'; // from `flutterfire configure`

void main() async {
  WidgetsFlutterBinding.ensureInitialized();
  await Firebase.initializeApp(options: DefaultFirebaseOptions.currentPlatform);
  runApp(const App());
}

// ...app stuff here
```

With Firebase properly initialized in your Flutter app, you're now ready to create an instance of the Vertex provider:

```dart
class ChatPage extends StatelessWidget {
  const ChatPage({super.key});

  @override
  Widget build(BuildContext context) => Scaffold(
        appBar: AppBar(title: const Text(App.title)),
        // create the chat view, passing in the Vertex provider
        body: LlmChatView(
          provider: VertexProvider(
            chatModel: FirebaseVertexAI.instance.generativeModel(
              model: 'gemini-2.0-flash',
            ),
          ),
        ),
      );
}
```

The `FirebaseVertexAI` class comes from the `firebase_vertexai` package. The AI Toolkit builds the `VertexProvider` class to expose Vertex AI to the `LlmChatView`. Note that you provide a model name (you have several options from which to choose), but you do not provide an API key. All of that is handled as part of the Firebase project.

For a complete example, check out vertex.dart on GitHub.

- Set up device permissions

To enable your users to take advantage of features like voice input and media attachments, ensure that your app has the necessary permissions:

Network access: To enable network access on macOS, add the following to your `*.entitlements` files:

```xml
<plist version="1.0">
    <dict>
      ...
      <key>com.apple.security.network.client</key>
      <true/>
    </dict>
</plist>
```

To enable network access on Android, ensure that your `AndroidManifest.xml` file contains the following:

```xml
<manifest xmlns:android="http://schemas.android.com/apk/res/android">
    ...
    <uses-permission android:name="android.permission.INTERNET"/>
</manifest>
```

Microphone access: Configure according to the record package's permission setup instructions.

File selection: Follow the file_selector plugin's instructions.

Image selection: To let users take a picture on, or select a picture from, their device, refer to the image_picker plugin's installation instructions.

Web photo: To take a picture on the web, configure the app according to the camera plugin's setup instructions.

Examples

To execute the example apps in the repo, you'll need to replace the `example/lib/gemini_api_key.dart` and `example/lib/firebase_options.dart` files, both of which are just placeholders. They're needed to enable the example projects in the `example/lib` folder.

gemini_api_key.dart

Most of the example apps rely on a Gemini API key, so for those to work, you'll need to add your API key to the `example/lib/gemini_api_key.dart` file. You can get an API key in Gemini AI Studio.

firebase_options.dart

To use the Vertex AI example app, place your Firebase configuration details into the `example/lib/firebase_options.dart` file. You can do this by running the `flutterfire` CLI tool from within the `example` directory, as described in the Add Firebase to your Flutter app docs.

Feedback!

Along the way, as you use this package, please log issues and feature requests as well as submit any code you'd like to contribute. We want your feedback and your contributions to ensure that the AI Toolkit is just as robust and useful as it can be for your real-world apps.

Unless stated otherwise, the documentation on this page applies to Flutter 3.38.1. Page last updated on 2025-11-4.